We're building open-source technology for local-first models, enabling personalized software experiences without sacrificing accessibility or privacy. We believe identity and memory are two sides of the same coin, and Tiles makes that coin yours: your user-agent. Our first product is an on-device memory management solution for privacy-conscious users, paired with an SDK that empowers developers to securely access user memory and customize agent experiences.

Philosophy

Our goal with Tiles is to co-design fine-tuned models together with the underlying infrastructure and developer tooling, so that inference and training run as efficiently as possible on local and offline systems.

The project is defined by four interdependent design choices¹:

- Device-anchored identity with keyless ops: Clients are provisioned through the device keychain and cannot access the registry by identity alone². Keyless operations are enabled only after an identity has been verified and linked to the device key; from then on, third-party agents can be granted access under user-defined policies³.

- Immutable model builds: Every build is version-locked and reproducible, ensuring consistency and reliability across updates and platforms.

- Content-hashed model layers: Models are stored and referenced by cryptographic hashes of their layers, guaranteeing integrity and enabling efficient deduplication and sharing.

- Verifiable transparency and attestations: Every signing and build event is logged in an append-only transparency log, producing cryptographic attestations that can be independently verified. This ensures accountability, prevents hidden modifications, and provides an auditable history of model provenance across devices and registries.
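
To make the content-hashing and transparency-log ideas concrete, here is a minimal Python sketch. It is not the Tiles implementation: `LayerStore`, `TransparencyLog`, and the event fields are hypothetical names chosen for illustration, SHA-256 is an assumed hash function, and a simple hash chain stands in for whatever log structure Tiles actually uses (production transparency logs are typically Merkle trees with signed checkpoints). Signing and attestation are left out entirely.

```python
import hashlib
import json


def layer_digest(data: bytes) -> str:
    """Return a content address for a layer: the SHA-256 of its bytes."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


class LayerStore:
    """Hypothetical in-memory store that keys layers by content hash."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        digest = layer_digest(data)
        # Identical bytes map to the same key, so duplicates are stored once.
        self._blobs.setdefault(digest, data)
        return digest

    def get(self, digest: str) -> bytes:
        data = self._blobs[digest]
        # Re-hash on read so a corrupted or swapped blob is detected.
        if layer_digest(data) != digest:
            raise ValueError(f"integrity check failed for {digest}")
        return data

    def __len__(self) -> int:
        return len(self._blobs)


class TransparencyLog:
    """Hypothetical append-only log; each entry commits to the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _entry_hash(prev_hash: str, event: dict) -> str:
        # Canonical JSON (sorted keys) keeps the hash stable across runs.
        payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry_hash = self._entry_hash(prev_hash, event)
        self.entries.append({"prev": prev_hash, "event": event, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._entry_hash(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

In this sketch, storing the same layer twice returns the same digest and keeps one copy, and editing any logged event after the fact makes `verify()` return `False`. That is the property an independent auditor would rely on.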

Implementation

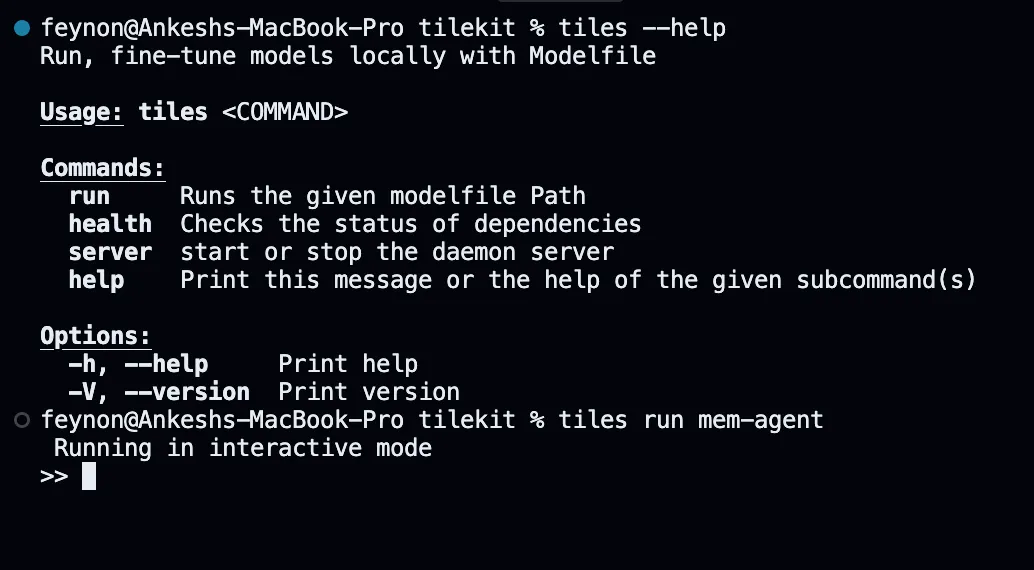

Our first alpha is a CLI assistant app for Apple Silicon devices, complemented by a Modelfile⁴-based SDK that lets developers customize local models and agent experiences within Tiles. We aim to evolve Modelfile in collaboration with the community, establishing it as the standard for model customization.

Tiles bundles a fine-tuned model that manages context and memories locally, on-device, as hyperlinked markdown files. Currently, we use the mem-agent model (from Dria, based on qwen3-4B-thinking-2507), and we are in the process of training our initial in-house memory models.

These models combine a human-readable external memory, stored as markdown, with learned policies (trained via reinforcement learning on synthetically generated data) that decide when to call Python functions to retrieve, update, or clarify memory. This lets the assistant maintain and refine persistent knowledge across sessions.
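
As a rough illustration of what such memory functions could look like, the sketch below implements retrieval and update over a directory of hyperlinked markdown files. It is an assumption-laden example, not the mem-agent tool API: `MarkdownMemory`, its method names, and the convention that link targets are relative to the memory root are all hypothetical. The learned policy itself (the model deciding when to call these functions, or when to ask the user for clarification) is out of scope here.

```python
import re
import tempfile
from pathlib import Path

# Matches markdown links to other memory files, e.g. [Alice](entities/alice.md).
LINK_PATTERN = re.compile(r"\[([^\]]+)\]\(([^)\s]+\.md)\)")


class MarkdownMemory:
    """Hypothetical tool layer over a directory of markdown memory files."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _resolve(self, relative_path: str) -> Path:
        path = (self.root / relative_path).resolve()
        # Refuse paths that escape the memory directory.
        if not path.is_relative_to(self.root.resolve()):
            raise ValueError(f"path outside memory root: {relative_path}")
        return path

    def retrieve(self, relative_path: str) -> str:
        """Return a file's contents, or an empty string if it does not exist."""
        path = self._resolve(relative_path)
        return path.read_text(encoding="utf-8") if path.exists() else ""

    def update(self, relative_path: str, line: str) -> None:
        """Append one line to a memory file, creating it if needed."""
        path = self._resolve(relative_path)
        path.parent.mkdir(parents=True, exist_ok=True)
        with path.open("a", encoding="utf-8") as handle:
            handle.write(line.rstrip("\n") + "\n")

    def links(self, relative_path: str) -> list[tuple[str, str]]:
        """List (label, target) pairs for markdown links found in a file."""
        return LINK_PATTERN.findall(self.retrieve(relative_path))


def demo() -> list[tuple[str, str]]:
    """Write two linked memory files and return the links from the first."""
    with tempfile.TemporaryDirectory() as tmp:
        memory = MarkdownMemory(Path(tmp))
        memory.update("user.md", "# User")
        memory.update("user.md", "- works with [Alice](entities/alice.md)")
        memory.update("entities/alice.md", "# Alice")
        return memory.links("user.md")
```

Because the memory is plain markdown, a user can open, read, and correct the same files the model edits. The `links` helper shows how an agent might follow hyperlinks from one memory file to related ones.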

Looking forward

We're building the next layer of private personalization: customizable memory, private sync, verifiable identity, and a more open model ecosystem.

- Memory extensions: Add support for LoRA-based memory extensions so individuals and organizations can bring their own data and shape the assistant's behavior and tone on top of the base memory model.

- Sync: Build a reliable, peer-to-peer sync layer using Iroh for private, device-to-device state sharing.

- Identity: Ship a portable identity system using AT Protocol DIDs, designed for device-anchored trust.

- SDK and standards: Work with the Darkshapes team to support the MIR (Machine Intelligence Resource) naming scheme in our Modelfile implementation.

- Registry: Continue supporting Hugging Face, while designing a decentralized registry for versioned, composable model layers using the open-source xet-core client tech.

- Research roadmap: As part of our research on private software personalization infrastructure, we are investigating sparse memory fine-tuning, text diffusion models, Trusted Execution Environments (TEEs), and Per-Layer Embeddings (PLE) with offloading to flash storage.

We are seeking design partners for training workloads that align with our goal of ensuring a verifiable privacy perimeter. If you're interested, please reach out to us at hello@tiles.run.